AI-generated summary

Physical Artificial Intelligence (AI), or Embodied AI, extends beyond robots and autonomous machines to deeply influence human perception and identity through visual and bodily representations. Nuria Oliver, AI expert and co-founder of the ELLIS Alicante Foundation, highlighted at the Bankinter Innovation Foundation’s Future Trends Forum how AI-driven generative models and beauty filters perpetuate harmful aesthetic biases. These biases reinforce stereotypes around race, gender, and social status, often without transparency or user awareness, affecting mental health, diversity, and self-image. For example, AI-generated images of “CEOs” or “criminals” tend to reflect prejudiced, Eurocentric, and racialized portrayals rooted in historical data, amplifying societal inequalities. Beauty filters, widely used by teenagers, homogenize facial features to fit undefined beauty standards, contributing to low self-esteem and distorted body perception.

Oliver emphasized that physical AI is not just a technical evolution but a cultural and social phenomenon that shapes how we see ourselves and others in digital environments dominated by unregulated platforms. She called for urgent regulatory frameworks ensuring transparency, traceability, and independent auditing of AI systems. Beyond governmental action, Oliver advocates for collective societal engagement and digital literacy to empower users to critically understand AI’s impact on identity and aesthetics. Her perspective broadens Embodied AI discussions to include ethical, psychological, and cultural dimensions, underscoring AI’s profound influence on social bodies and human values.

Nuria Oliver warns at the Future Trends Forum about how physical AI reinforces stereotypes and affects mental health, especially among young people

Physical Artificial Intelligence (Embodied AI) is no longer just a matter of factories, operating rooms or robots that move around the world. It is also in our pockets, in the filters of our selfies and in the images that algorithms generate to define, for example, what a genius, a CEO or an “attractive” person should look like. In this new scenario, where the physical is also the visual and the bodily, AI is shaping our perception of reality… and of ourselves.

During her speech at the Bankinter Innovation Foundation’s Future Trends Forum, Nuria Oliver, AI expert and co-founder of the ELLIS Alicante Foundation, issues a clear warning: aesthetic biases in artificial intelligence are reinforcing harmful stereotypes and already affecting mental health, diversity and self-image. Most worryingly, this phenomenon occurs without transparency, without regulation, and often without users being aware of it.

The forum brought together 40 international experts to discuss the technological, economic and social implications of physical AI (Embodied AI), a concept that refers not only to autonomous systems that operate in the real world, but also to those that have a direct impact on the human body, such as facial representation algorithms. In this context, Oliver’s talk opens up a critical line of inquiry that has been little explored: the way in which AI represents the human and what that says about us.

The face of a CEO, of a genius… or a criminal

In her speech, Nuria Oliver shows examples generated with the AI tool MidJourney to illustrate the extent to which current generative models are steeped in bias. Given simple prompts such as “president,” “CEO,” “criminal,” or “genius,” the system produced images that repeated predictable aesthetic and social patterns: white, middle-aged men with Eurocentric features for prestigious professions; racialized people for negative categories.

She did not add specific descriptions. She wrote only one word. Even so, the AI filled in the gap with stereotypes learned from the data. The image of the “president” seemed to be taken from an American rally; that of a “nurse” was anchored in the iconography of the 1950s. According to Oliver, this shows that generative models reflect the world not only as it is, but as it has been historically represented, amplifying gender, race, and class biases.

This type of AI, Nuria explains, is not neutral. It is a technology that learns from the past to imagine the present. And that has consequences, because the more these tools are used in education, communication, personnel selection or entertainment, the more they reinforce biased representations that influence how we perceive one another.

The Filter of Perfection: Bias and Mental Health

More than 90% of teenage girls use beauty filters before uploading a photo to social media. This figure is one of the most striking in Nuria Oliver’s talk, a large part of which analyses the impact of AI-generated filters on self-image, especially among young people.

These filters – designed without clear criteria and applied by default on many platforms – act as a kind of “automatic Photoshop”, adjusting facial features according to beauty canons that no one has publicly defined. Who decides what is beautiful? What parameters are used? What type of face is prioritized? According to Oliver, there is no transparency or regulation. And yet, their use is shaping the dominant visual standard.

To illustrate their impact, Nuria showed her own image: an original selfie and two versions with filters applied. The difference was obvious. The AI smoothed the skin, refined the nose, enlarged the eyes. It was still her, but converted into an “improved” version according to criteria that no one knows. This subtle but constant alteration is having real consequences: distorted body perception, low self-esteem, aesthetic pressure.

But Oliver does not stop at the anecdotal. ELLIS Alicante has developed tools to analyse these filters technically, beyond the social debate. With the OpenFilter platform and the FairBeauty and B-LFWA datasets, her team has been able to apply beauty filters to images from open databases and study their effect from a scientific perspective: do they make people more attractive? Do they reduce facial diversity? Do they affect the ability to recognize the person portrayed?

The answers, based on her research published at conferences such as NeurIPS and in journals such as Royal Society Open Science or Social Media + Society, confirm what many suspected: filters work, yes. 96% of the faces were perceived as more attractive after a filter was applied. But filters also homogenize features, reduce diversity, and alter the perception that others have of the person portrayed.
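To make the idea of “reduced diversity” concrete, here is a minimal sketch of one way homogenization could be quantified. It is not the method used by ELLIS Alicante or OpenFilter: the function names, the `toy_filter` that pulls every face toward a single “canon”, and the random vectors standing in for face embeddings are all assumptions made for illustration. The measure is simply the average distance between pairs of faces before and after filtering.

```python
import math
import random
from itertools import combinations


def mean_pairwise_distance(vectors):
    """Average Euclidean distance over all distinct pairs of vectors."""
    pairs = list(combinations(vectors, 2))
    if not pairs:
        raise ValueError("need at least two vectors")
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


def homogenization_ratio(original, filtered):
    """Diversity after filtering divided by diversity before.

    A value below 1 means the filtered faces are closer to each other
    than the originals were, i.e. the filter homogenizes them.
    """
    return mean_pairwise_distance(filtered) / mean_pairwise_distance(original)


def toy_filter(vector, target, strength=0.5):
    """Hypothetical stand-in for a beauty filter: moves a face toward `target`."""
    return [(1 - strength) * v + strength * t for v, t in zip(vector, target)]


# Synthetic stand-ins for face embeddings (assumption: 20 faces, 8 dimensions).
rng = random.Random(0)
faces = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(20)]
canon = [0.0] * 8  # the single "ideal" every filtered face is pulled toward

filtered_faces = [toy_filter(face, canon) for face in faces]
ratio = homogenization_ratio(faces, filtered_faces)
print(f"diversity after / before: {ratio:.2f}")
```

In this toy setup the ratio is 0.5 by construction, because every face is moved halfway toward the same point. A real study would replace the random vectors with embeddings from a face model and the toy filter with actual filtered images.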

The problem is not that we “improve” an image. The problem is that all the improvements point in the same direction. And that, according to Oliver, is a form of bias that directly affects our mental health and the visual richness of our society.

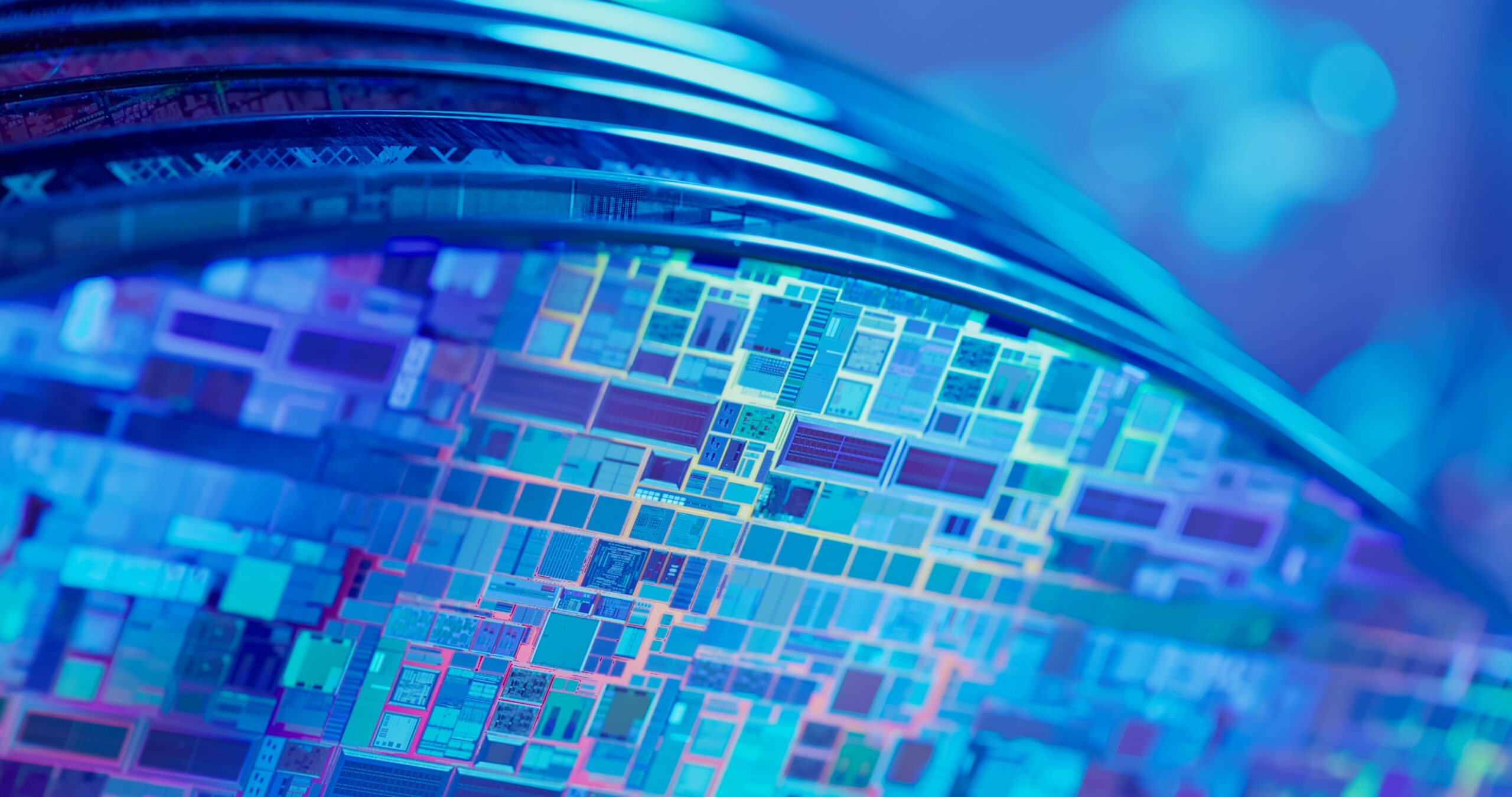

Physical AI: A Revolution That Is Also Cultural

Although most conversations about physical AI focus on robots, sensors or smart devices, Nuria Oliver proposes a different perspective: physical AI is also that which acts directly on our image, our skin, our face. Because when algorithms modify how we see ourselves – and how others see us – they are interacting with the body, even if they don’t touch it.

This type of AI, Nuria explains, is part of the same transformation that we are seeing in autonomous systems or intelligent assistants. The difference is that its impact is more subtle, more everyday and more personal. It reaches us in our leisure time, in our posting habits, in the filters we apply without thinking. And it does so from a cultural base: it is trained on data loaded with history, discrimination and social standards.

Oliver stresses that we live in an environment dominated by social networks and unregulated platforms, whose influence is already comparable to, or greater than, that of governments. Services that did not exist two decades ago today condition the perception of beauty, the dominant aesthetic canon and the public representation of identities. Four of the six most populous “countries” in the world, she said, have no president, no constitution, and no democracy. They are platforms.

In this context, physical AI imposes an urgent need for cultural reflection. What does it mean to be visible in an environment dominated by algorithms? Which bodies are validated and which are hidden? How do these tools condition our idea of the human?

Oliver’s conclusion is clear: physical AI cannot be understood only as a technical evolution. It is also a social and cultural phenomenon. And as such, it must be analyzed, regulated and, above all, explained.

Regulation, transparency and collective action

Nuria Oliver does not limit herself to diagnosing a problem. She also makes a clear call to action. As chair of the Transparency Group for the development of the Code of Conduct on general-purpose AI models under the AI Act, and a member of the supervisory board of the Spanish Agency for the Supervision of Artificial Intelligence, Oliver knows first-hand the regulatory challenges posed by this technology.

In her speech, she strongly defends the need for transparency and governance. “We don’t know who sets the beauty standards in the filters. We don’t know what data trains generative models. And we don’t have the tools to audit or question their decisions,” she warns. This void leaves users in a position of total vulnerability to systems that influence their identity without their being aware of it.

The approach cannot be solely technical. Oliver insists that we need clear public policies, regulatory frameworks that guarantee the traceability of algorithms, and independent auditing mechanisms. She also underlines the importance of open research and the development of tools such as OpenFilter, which make it possible to objectively assess the behaviour of commercial filters without violating users’ privacy.

But the solution does not lie only with governments or technology companies. Collective action is needed, she says. Society needs to be informed and to participate in this debate. Critical digital literacy is required, especially among younger people, so that they understand what is behind the perfect image they see on their screens. Because trust in artificial intelligence is built from understanding and participation.

And here, the Bankinter Innovation Foundation – and forums such as the Future Trends Forum – have a key role: to create spaces where technological innovation is analysed with rigour, a critical vision and a transformative vocation.

A different look at physical AI

Nuria Oliver’s talk at the Future Trends Forum is not about walking robots or articulated arms on assembly lines. It is about another form of physical artificial intelligence: the one that molds faces, builds stereotypes and acts directly on our digital identities. An AI that, without a mechanical body, has a profound effect on social bodies.

This approach broadens the conversation about Embodied AI. It forces us to look beyond hardware and consider the cultural, psychological, and ethical implications of systems that affect how we see ourselves and how others see us. Because algorithms mobilize ideas. They decide what is beautiful, what is desirable, what is professional, what is normal.

That is the view that Oliver provides: the one that connects AI and society, technology and emotion, data and rights. It is a necessary perspective for anticipating the futures we are building with each selfie, each prompt and each filter.

At the Bankinter Innovation Foundation we will continue to promote this conversation. Because AI, in addition to being a matter of efficiency or productivity, is also a matter of humanity.
