
One of the fastest-emerging trends in artificial intelligence will extend its capabilities to organizations of every type, regardless of size

Artificial intelligence (AI) is revolutionizing the way businesses operate and interact with their customers. A McKinsey report reveals that adoption of this technology by organizations hovered at around 50% over the last six years, but has risen to 72% this year. The breakneck advance of “smart” models poses significant challenges, especially for organizations seeking more control, privacy, and personalization in their technology solutions.

These challenges affect sectors as diverse as healthcare, finance, marketing, education, and manufacturing, where sensitive data is a constant concern. In this context, local and custom models have become a trend that promises to transform the way organizations harness the power of AI.

Most commercial solutions rely on cloud services, where business data is processed on external servers. However, this dependency has notable disadvantages, such as the absence of direct control over data security, processing latency, and a lack of deep customization. On-premises models, which are installed and run on internal servers, offer an alternative that mitigates these problems.

Specifically, installing an open-source Large Language Model (LLM) in an on-premises environment avoids dependence on an external service and keeps data privacy and security under the organization's own control, a crucial aspect for companies that handle sensitive information. Because requests no longer have to travel over the Internet to an external provider, this configuration can considerably reduce response times and increase operational efficiency, a decisive advantage in a rapidly evolving market where speed in information processing is key.

Better personalization, adapted to business needs

An on-premises LLM can also be customized to the specific needs of the company. This flexibility makes it a powerful tool for various purposes: natural language analysis, which can be used to extract insights from unstructured data; content generation, where the model can automatically create high-quality texts; and support for business decision-making, by providing data-driven predictions.

All of this draws on knowledge that comes not from generic training data but from reliable, up-to-date business data, which the model can consult in real time. This technique is called Retrieval Augmented Generation (RAG): relevant documents are retrieved first and then supplied to the model as context for its answer. One example is an AI model that assists legal teams by automatically consulting relevant legislation or contracts while they write reports.
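To make the idea of RAG more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production design: the documents, their identifiers, and the function names are all hypothetical, retrieval is done with a simple word-overlap similarity rather than the embedding search a real system would likely use, and the final call to a locally hosted LLM is left out because it depends on the model server an organization chooses.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; a real deployment would load these
# from the company's own systems (contracts, policies, manuals, ...).
DOCUMENTS = {
    "contract-017": "Supplier contract: payment is due within 30 days of invoice.",
    "policy-hr-02": "Employees accrue vacation days monthly according to HR policy.",
    "gdpr-note": "Personal data must be processed transparently and stored securely.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=1):
    """Return the ids of the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    scored = [
        (cosine(q, Counter(tokenize(text))), doc_id)
        for doc_id, text in documents.items()
    ]
    scored.sort(reverse=True)
    # Drop documents that share no words with the question.
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_prompt(question, documents, top_k=1):
    """Assemble the prompt: retrieved context first, then the question."""
    doc_ids = retrieve(question, documents, top_k)
    context = "\n".join(f"[{d}] {documents[d]}" for d in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


prompt = build_prompt("When is payment due on the supplier contract?", DOCUMENTS)
print(prompt)  # this text would then be sent to the on-premises LLM
```

The point of the design is that the model's answer is grounded in the retrieved business document rather than in whatever it memorized during training, and that both the documents and the prompt stay on internal infrastructure.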

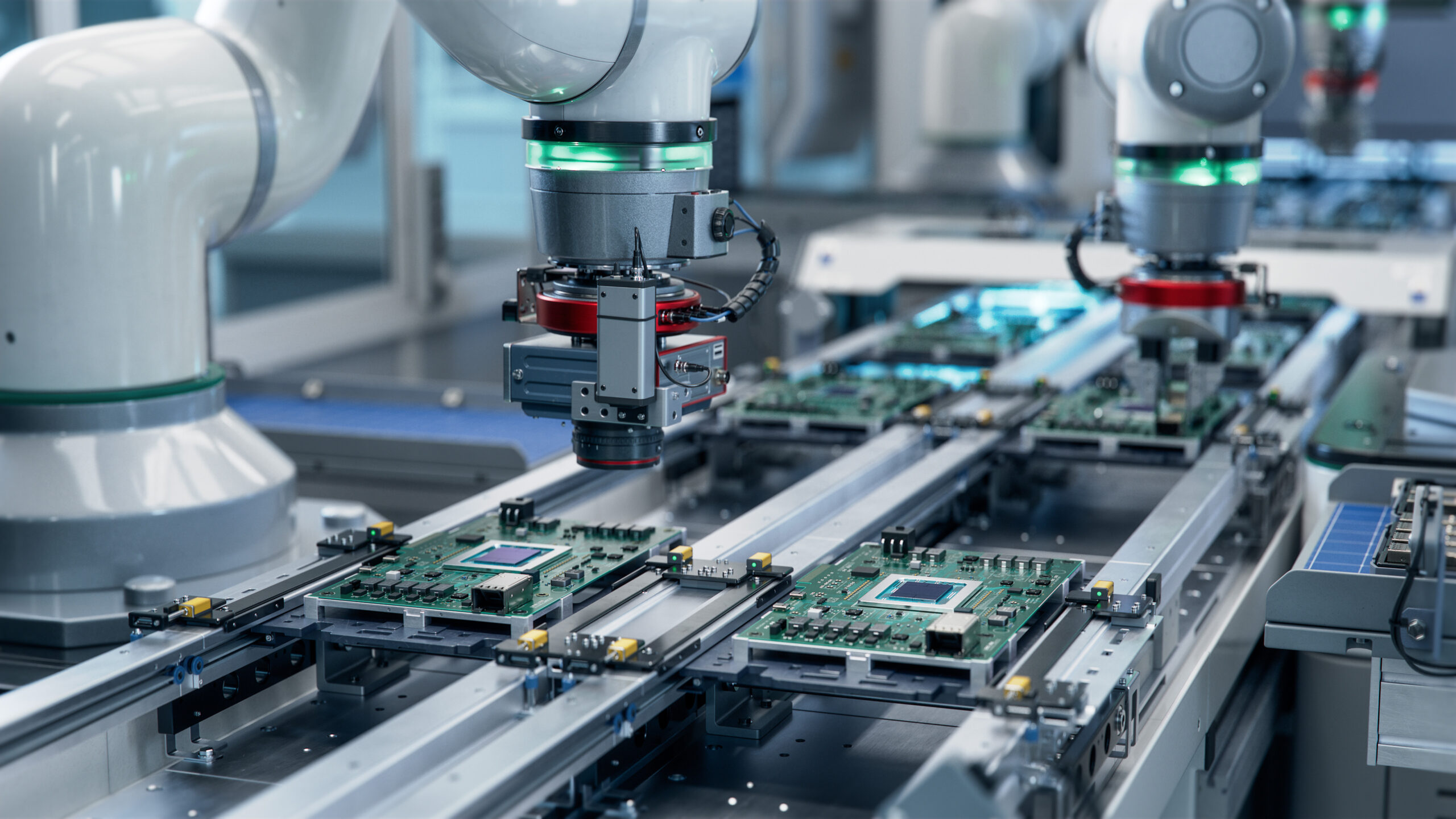

In addition, an on-premises LLM allows for greater integration with internal systems and processes. Businesses can incorporate it into their existing software and workflows, streamlining operations and improving customer engagement through intelligent chatbots and personalized virtual assistants. These assistants not only understand users’ questions but also access internal data, such as purchase histories or preferences, to offer quick and targeted solutions.

Interaction history and adaptive answers as advantages

In fact, an on-premises model can learn from previous customer interactions to provide more relevant answers, as well as manage unique internal processes using real-time data analytics. The ability of an LLM to learn and adapt to the specifics of an industry or a company is a great competitive advantage.

Personalization is key, especially in marketing. With on-premises models, businesses can analyze demographic and behavioral data to create hyper-personalized campaigns that increase conversion rates. Similarly, educational institutions can deploy local AI models to analyze student data, identify areas for improvement, and deliver content tailored to each student's specific needs, while maintaining data privacy.

Another important aspect is long-term cost reduction. While the hardware, installation, and configuration of an on-premises LLM require an upfront investment, over time the total cost can be significantly lower than that of cloud-based services, especially once the expenses associated with bandwidth and data traffic are taken into account.
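The cost argument can be illustrated with a simple break-even calculation. The sketch below is only an arithmetic illustration: the function name is hypothetical and every figure is a made-up placeholder, not data from the McKinsey report or from any real deployment. It assumes constant monthly costs on both sides and finds the first month in which the cumulative on-premises cost is no higher than the cumulative cloud cost.

```python
import math


def break_even_month(upfront_cost, onprem_monthly, cloud_monthly):
    """First month in which cumulative on-premises cost <= cumulative cloud cost.

    Returns None when the on-premises running cost is not lower than the
    cloud cost, since the upfront investment is then never recovered.
    """
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_cost / monthly_saving)


# Placeholder figures, chosen only to show the calculation.
months = break_even_month(
    upfront_cost=60_000,   # hardware, installation, configuration
    onprem_monthly=1_500,  # power, maintenance, staff time
    cloud_monthly=4_000,   # usage fees, bandwidth, data traffic
)
print(months)  # 24
```

With these placeholder numbers the investment is recovered after two years; an organization would substitute its own estimates, and a short hardware lifetime or low usage could push the result the other way.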

Finally, using an LLM in an on-premises environment makes it easier to meet strict regulatory and compliance standards, such as the General Data Protection Regulation (GDPR) in Europe, which requires transparent and secure handling of personal data. This is particularly important in regulated sectors such as finance and healthcare, where data management is subject to strict guidelines and full control over AI models, data storage, and data processing is therefore essential.

The use of open-source models allows organizations to experiment and develop applications without the high costs associated with commercial licensing. In addition, open-source communities foster innovation by sharing advances and best practices. While transitioning to these models requires investment and planning, the long-term strategic benefits make it worthwhile. In a world where technology evolves rapidly, betting on local solutions is a step towards autonomy and operational excellence.