AI-generated summary

Quantum technology, rooted in the principles of quantum physics studied since the early 20th century, is rapidly evolving from theoretical research to practical, commercial applications. Unlike classical computing, which relies on bits representing 0 or 1, quantum computing uses qubits that can exist in multiple states simultaneously, enabling far more complex and efficient data processing. This technology exploits atomic-level quantum phenomena, requiring extreme operational conditions such as near absolute zero temperatures to minimize errors. Advances in quantum technology are making it increasingly accessible, particularly for cutting-edge companies, and it is anticipated to become a significant part of everyday life, albeit likely through remote access due to its specialized requirements.

Quantum computing promises to revolutionize numerous fields by handling vast data volumes and complex simulations that classical computers struggle with. For example, it can drastically accelerate drug discovery by analyzing an enormous number of molecular combinations, enhance artificial intelligence training efficiency, and improve simulations of natural and engineered systems like weather patterns and engine gases. As global data generation expands exponentially, reaching hundreds of zettabytes, classical computing approaches are reaching their limits. Quantum computing offers a solution by processing data at unprecedented speeds, effectively transforming our capacity to manage Big Data. This advancement heralds a new era where the explosion of information and computational power reshapes science, technology, and industry.

This innovation targets complex problems that traditional computers cannot solve. Understanding its main characteristics will help you catch up, since in a few years it may be in common use in many areas of life.

Quantum technology is receiving more and more attention, and no wonder. The quantum physics behind this new kind of computation is becoming better understood, and thanks to advances in other fields, quantum computers are rapidly falling in cost. What was once merely theoretical and then went through a laboratory phase is becoming established as commercial technology. It is possible that in a few years it will be common to use quantum technology in different areas of day-to-day life, although probably remotely, because of the particular conditions it requires to operate.

What is quantum technology?

Quantum technology covers all the technical fields that rely on the quantum properties of reality to function. As an analogy, you can think of masonry buildings as gravitational technology, because it is the mass of the bricks, and therefore their weight, that holds the facades together. Quantum technology relies on properties that are considered exotic because they cannot be perceived with the naked eye: they operate at the atomic level.

Why is there so much talk about quantum physics?

Far from being recent, quantum physics has been studied since 1900, and in 1918 Planck received the Nobel Prize in Physics for his discovery of energy quanta, which laid the foundation for quantum mechanics. What has changed is the level of technology, which is now much higher, and the applications, which are reaching everyday life. That is why we talk more and more frequently about quantum computing, quantum light emission, quantum communications, and so on.

The Age of Quantum Computing

Until a few decades ago, almost all quantum studies were theoretical. This was not because the theories could not be demonstrated, since many have been, but because human technology was not up to the task of manipulating this reality. The same thing has happened before in other branches of computing.

In the middle of the last century, now-classical computing bordered on magic, to the point that in 1965 Gordon Moore formulated a law predicting that the number of transistors on a chip, and with it computing power, would keep doubling at regular intervals (about every two years in his later revision). And it was fulfilled. It still broadly holds, in fact, although it is increasingly difficult to pack more transistors into the finite physical space of a processor.

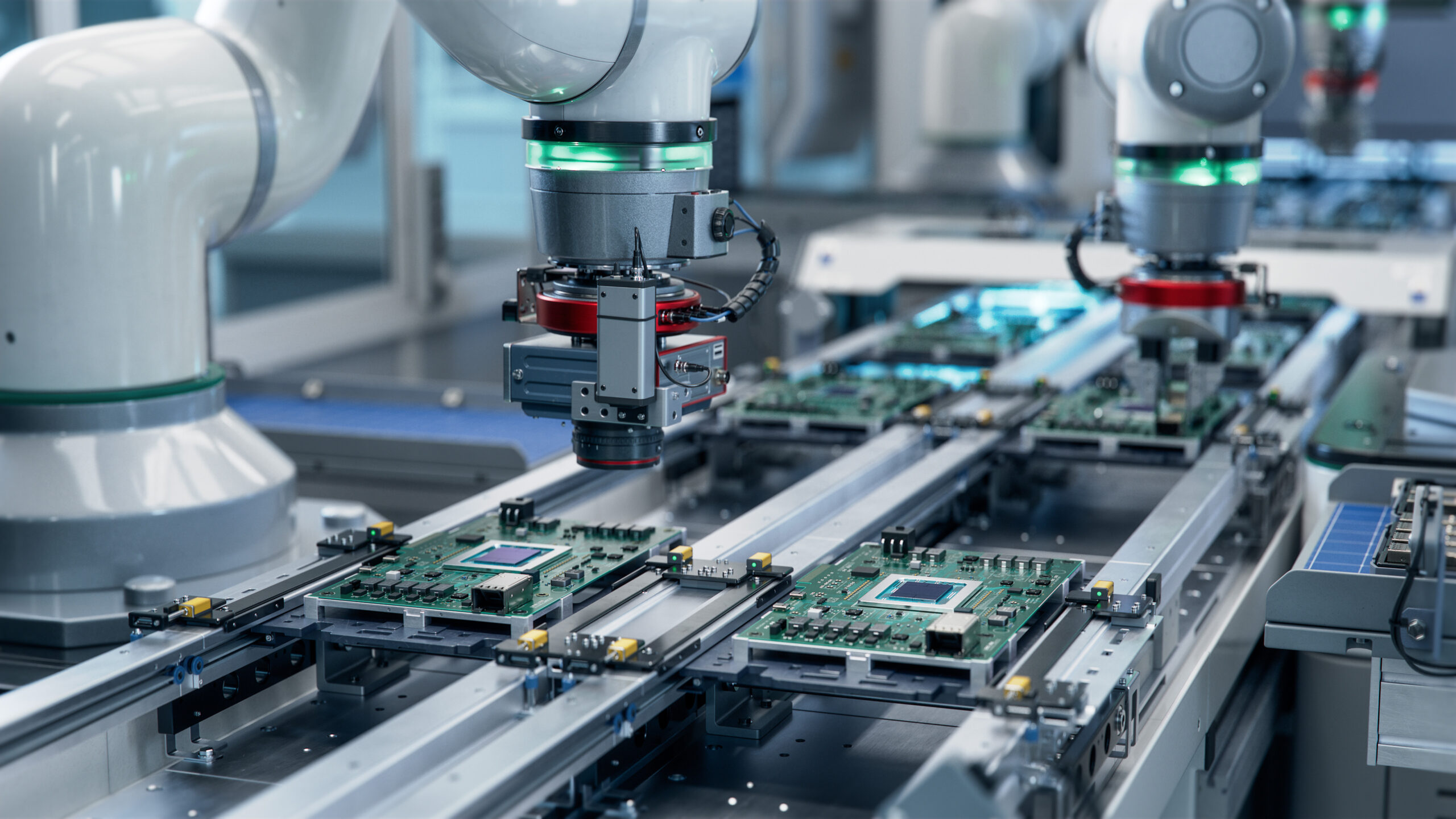

Classical computing is exhausting its avenues for improvement, so quantum computing is positioning itself as the future successor in information processing. Advances in this branch of study are accelerating, and quantum processors have long since left the laboratory to make their first attempts as a commercial technology, although so far only in large companies at the technological cutting edge.

How does a quantum computer work?

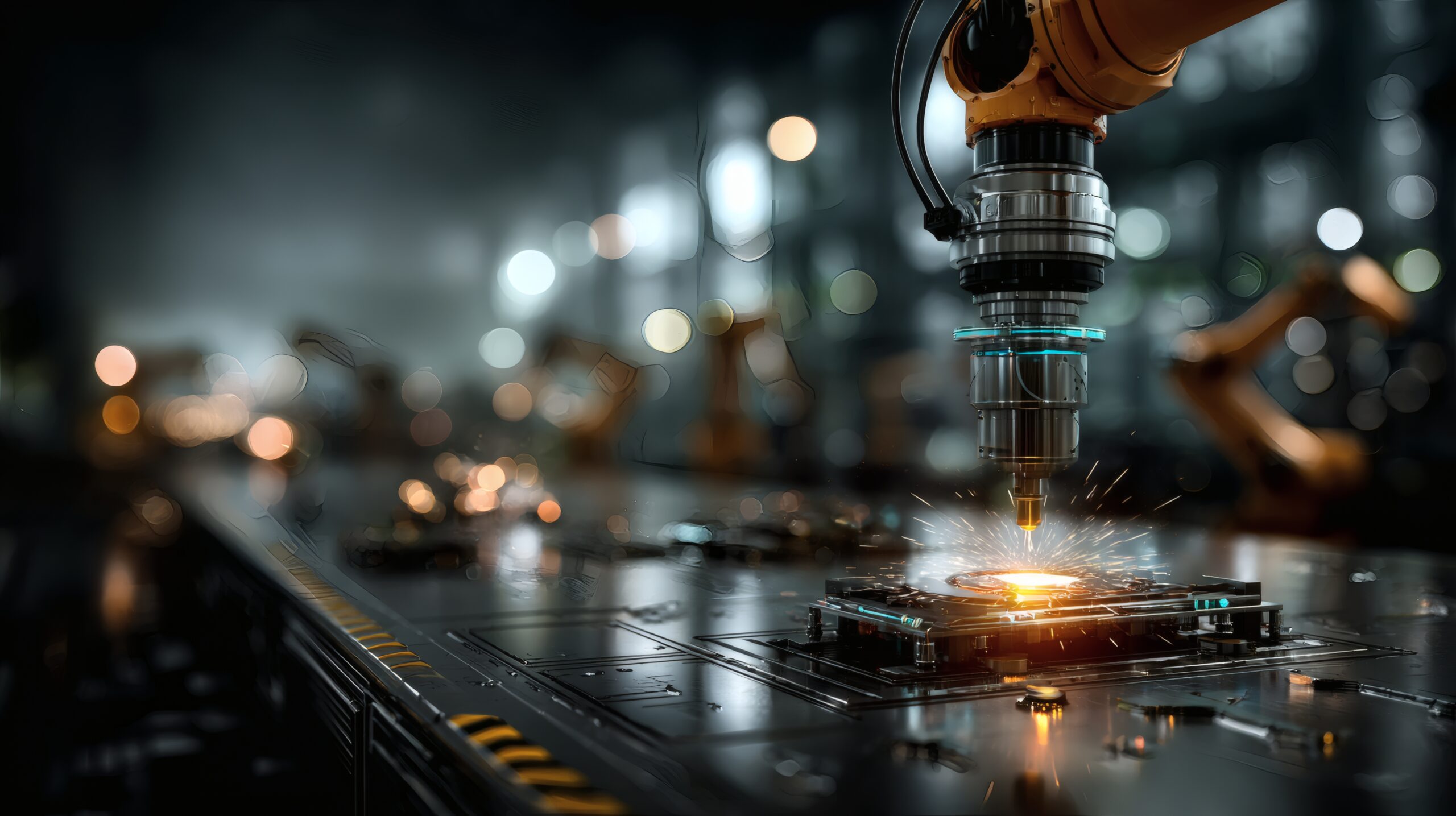

A quantum processor is one capable of harnessing the phenomena of quantum mechanics to work with information. Just as a conventional computer works with bits of information (0 or 1) recorded in physical structures such as silicon, a quantum computer works with qubits, units whose state is described by continuous values and that allow operations a bit cannot perform. For example, unlike a bit, a qubit can hold a superposition of 0 and 1 at the same time.
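To make the idea of a qubit holding two values at once more concrete, here is a minimal sketch that simulates a single qubit on an ordinary computer. It is an illustration under simplifying assumptions, not a description of how real quantum hardware is programmed: the function names `hadamard` and `probabilities` are hypothetical, and the qubit is modeled as just two real amplitudes.

```python
import math

# A single qubit modeled as two amplitudes (a, b) for the basis states 0 and 1.
# This is a classical simulation for illustration only.

def hadamard(state):
    """Apply the Hadamard gate, which turns a definite 0 into an equal mix of 0 and 1."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Born rule: the chance of each measurement outcome is the squared amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

zero = (1.0, 0.0)        # behaves like a classical bit set to 0
plus = hadamard(zero)    # superposition of 0 and 1
p0, p1 = probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

In this sketch the state after the gate gives a 50% chance of reading 0 and a 50% chance of reading 1, which is what "overlapping values" means in practice: the qubit carries both possibilities until it is measured.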

Although qubits are far superior in some fundamental respects, working with such delicate systems means that minimal variations in working conditions cause enormous errors. That’s why quantum computers are often cooled to around -273 °C, very close to absolute zero (-273.15 °C), and require state-of-the-art decontamination protocols.

Why are quantum computers the future?

There are many bottlenecks in today’s computing that make quantum computers indispensable:

For example, drugs are analyzed in three stages of study: in vivo, historically the first, in which the drug is applied to living beings; in vitro, on cells in the laboratory; and in silico, by means of numerical calculations on computers. While all three are necessary, the last two have accelerated drug discovery. But there are more than 10^60 possible combinations of the compounds we know, and analyzing the properties of such a volume without quantum computing would mean never getting through them all.
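A rough calculation shows why a search space of this size is out of reach for brute force. The sketch below reads the number of combinations as 10^60 and assumes, purely for illustration, a hypothetical classical machine that evaluates 10^18 candidates per second; neither the machine nor the one-evaluation-per-candidate model comes from the article.

```python
# Assumptions (illustrative, not from the article): one evaluation per candidate,
# on a hypothetical machine that performs 10**18 evaluations per second.
CANDIDATES = 10**60
EVALS_PER_SECOND = 10**18
SECONDS_PER_YEAR = 365 * 24 * 3600

seconds_needed = CANDIDATES // EVALS_PER_SECOND
years_needed = seconds_needed // SECONDS_PER_YEAR

# Report only the order of magnitude, since the inputs are rough.
order_of_magnitude = len(str(years_needed)) - 1
print(f"about 10^{order_of_magnitude} years")
```

Under these assumptions the exhaustive search would take on the order of 10^34 years, while the universe is roughly 10^10 years old. Faster classical chips alone do not close a gap of that size.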

Another application of quantum technology is speeding up the training of artificial intelligence systems, which are increasingly used in all fields. Training an AI is costly both in resources (mainly energy) and in time, and quantum technology could help reduce both significantly.

In general, any simulation of a complex model, such as the weather or the gases inside an engine, requires a volume of computation that bogs down conventional computers. Quantum computing is already beginning to be used to understand systems such as the coupled ocean and atmosphere.

It also applies to more specific and advanced uses, such as calculating highly fuel-efficient routes through space, designing materials at the molecular level for very specific purposes, or even managing logistics.

More data per unit of time

The key to quantum computing, in an extraordinarily summarized way, is that it is able to work with a higher volume of data in less time. It’s like stepping on the accelerator pedal or having discovered the wheel. Humanity’s use of data remained relatively stable for millennia, but for the last several centuries it has not stopped increasing.

If in 2010 humanity had generated two zettabytes of information (a zettabyte is one trillion gigabytes), by 2015 the figure was 16 and by 2020 it had reached 64. It is estimated that we will close 2025 with more than 180 zettabytes, a volume of data that conventional computers with classical chips cannot keep up with. Although it may seem paradoxical, quantum computing will not solve these growing pains; instead, it will make today's exponential growth look as linear as the data of the fifteenth century looks from our perspective.
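The figures above can be turned into a growth rate with a few lines of arithmetic. This is a minimal sketch that assumes steady exponential growth between the quoted years; the helper names `doubling_time_years` and `annual_growth_rate` are hypothetical.

```python
import math

# Data volume in zettabytes, as quoted in the article.
data_zb = {2010: 2, 2015: 16, 2020: 64, 2025: 180}

def doubling_time_years(start, end):
    """Years needed for the volume to double, assuming steady exponential growth."""
    growth_factor = data_zb[end] / data_zb[start]
    return (end - start) / math.log2(growth_factor)

def annual_growth_rate(start, end):
    """Average yearly growth rate over the period, as a fraction."""
    growth_factor = data_zb[end] / data_zb[start]
    return growth_factor ** (1 / (end - start)) - 1

print(f"doubling time 2010-2020: {doubling_time_years(2010, 2020):.1f} years")
print(f"annual growth 2010-2020: {annual_growth_rate(2010, 2020):.0%}")
```

Between 2010 and 2020 the volume grew 32-fold, which works out to a doubling roughly every two years, or about 41% growth per year. The same functions can be called with other pairs of years from the table to see how the pace changes.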

There is already talk of the Big Bang of Big Data or Big Bang Data, derived in part from the application of Moore’s law and in part from the processing capacity of quantum computers. If humanity now generates this volume of information, imagine what we will create with machines capable of handling larger volumes.