AI-generated summary

Neuromorphic computing, a concept introduced by Carver Mead in the 1980s, aims to design electronic systems inspired by the human brain’s neural architecture. This approach leverages the brain’s efficiency and adaptability to develop computing systems that differ fundamentally from traditional processors. Neuromorphic technology encompasses specialized hardware, such as Intel’s Loihi and IBM’s TrueNorth chips, which mimic neural networks, and software models like artificial neural networks and deep learning algorithms that replicate brain-like learning and adaptation.

Despite promising advances, neuromorphic computing faces key challenges including the complexity of accurately replicating brain functions, scalability limitations, efficiency concerns, and sensitivity to failures. Integration into existing technologies and large-scale applications remains difficult. Nonetheless, ongoing research and development are expanding its practical uses, particularly in robotics and artificial intelligence, enabling improved autonomy, pattern recognition, and real-time decision-making. Leading companies like Intel, IBM, and Qualcomm, along with research institutions such as Caltech, MIT, and Stanford, are at the forefront of this field.

Looking ahead, neuromorphic computing is poised to revolutionize intelligent technologies by enhancing machine efficiency and adaptability, potentially transforming industries through smarter automation and more intuitive human-machine interfaces. This interdisciplinary fusion of neuroscience and computing promises a future where machines not only emulate but also learn from natural brain processes, driving innovation in both technology and our understanding of cognition.

Neuromorphic computing represents an exciting crossover between technology and biology, a frontier where computer science meets the mysteries of the human brain. Designed to mimic the way humans process information, this technology promises to reshape fields from artificial intelligence to robotics. But what exactly is neuromorphic computing, and why is it taking center stage?

Origins and evolution of neuromorphic computing

The term neuromorphic computing was coined in the 1980s by the scientist Carver Mead, who proposed creating electronic systems inspired by the neural structure of the human brain. The idea rested on the premise that the brain is, in essence, an extremely efficient and versatile information processor. Since then, neuromorphic computing has evolved considerably, drawing on advances in neuroscience, engineering and, especially, artificial intelligence.

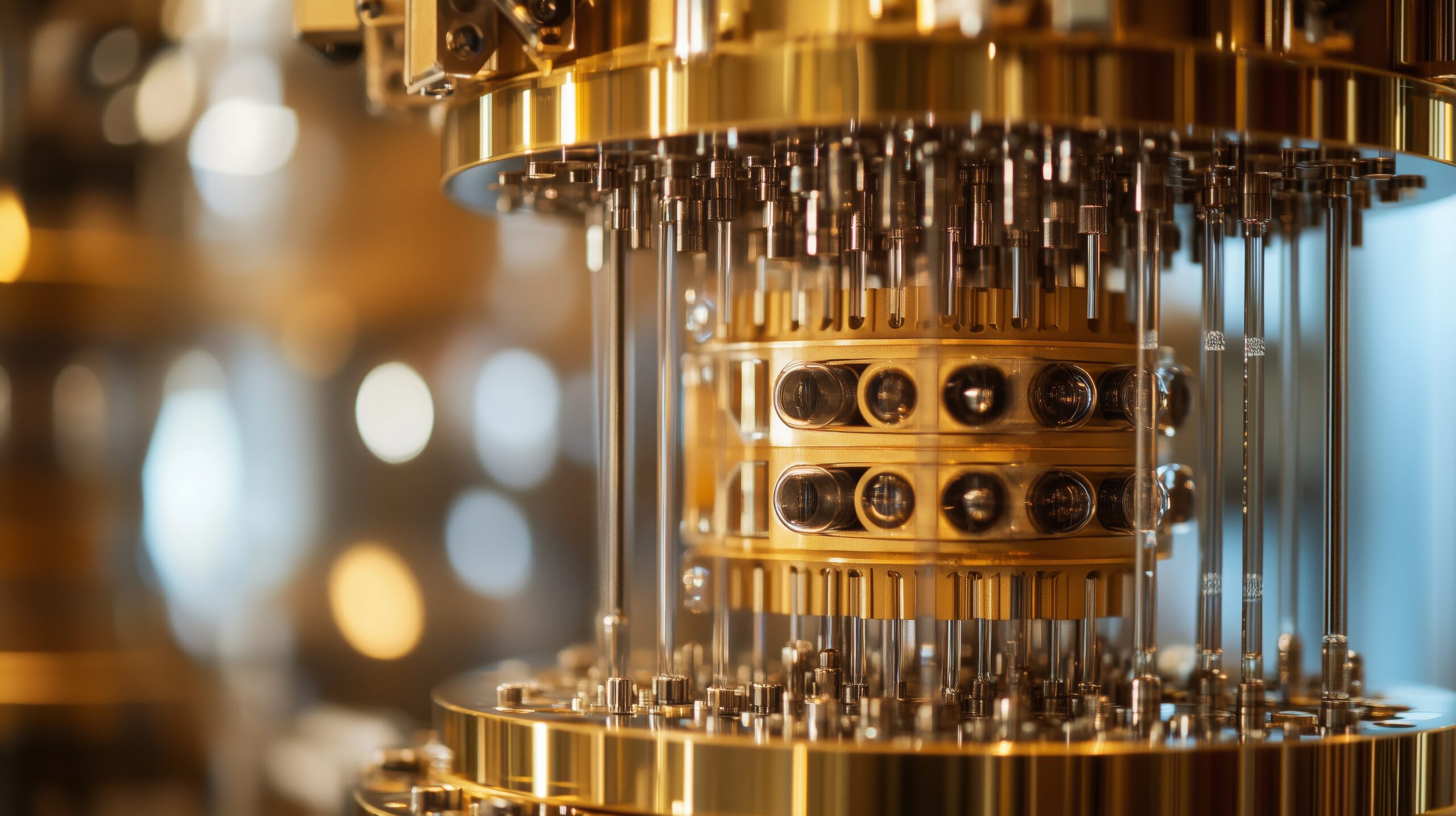

Key technologies in neuromorphic computing

Neuromorphic computing relies on two fundamental technological pillars: hardware and software. On the hardware side, dedicated neuromorphic chips are being developed, such as Intel’s Loihi chip, now in its second generation, which is designed to mimic the structure and functioning of biological neural networks. These chips use a radically different architecture from traditional processors, allowing more efficient and adaptive processing.

On the software side, algorithms and computational models are being developed that seek to replicate aspects of learning and brain processing, such as artificial neural networks and deep learning. These models are inspired by the structure of the brain and by its learning and adaptation mechanisms.
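To make the idea of mimicking a biological neuron more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook simplification of how a neuron accumulates input and fires. It is an illustration only, not the programming interface of Loihi, TrueNorth or any other chip; the function name `simulate_lif` and the parameters `threshold`, `leak` and `reset` are assumptions made for this example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    All names and default values here are illustrative assumptions,
    not parameters of any real neuromorphic chip.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Keep a fraction of the stored potential (the "leak"),
        # then add the input received in this time step.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # the neuron fires
            potential = reset  # and its potential is reset
        else:
            spikes.append(0)   # below threshold: stay silent
    return spikes


if __name__ == "__main__":
    # A weak, constant input makes the neuron fire only occasionally.
    print(simulate_lif([0.3] * 10))
```

With a constant input of 0.3, the neuron stays silent until enough input has accumulated to cross the threshold, fires once, and then starts accumulating again. This event-driven behavior, where nothing happens between spikes, is part of what distinguishes this style of processing from a conventional processor.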

Current challenges in neuromorphic computing

Despite its promise, neuromorphic computing faces several significant challenges. Chief among them is the inherent complexity of the human brain: replicating even a fraction of its functionality is a monumental task that requires a deep understanding of its internal mechanisms, many of which are not yet fully understood.

Integrating these systems into practical applications is also difficult. While neuromorphic chips and algorithms show great potential, incorporating them into existing technologies and scaling them up remain open problems. As Future Trends Forum expert Daniel Granados predicts, the future of computing will be hybrid: different technologies will coexist and communicate with each other, and today’s silicon computing and von Neumann architecture will be complemented by neuromorphic computing, photonic computing and quantum computing.

Finally, work is being done on three fronts:

- Scalability: current neuromorphic computers are relatively small and are not capable of performing complex tasks on a large scale.

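- Efficiency: neuromorphic computers are not yet as efficient as traditional computers on general-purpose workloads, even though individual chips can be very energy-efficient on the tasks they are designed for.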

- Robustness: neuromorphic computers are susceptible to failure, as their components are more sensitive to disturbances than the components of traditional computers.

Advances and emerging applications

Despite the challenges, we are already seeing significant advances in the field. Neuromorphic chips, for example, are finding applications in areas such as robotics, where they enable greater autonomy and learning capabilities. In the field of artificial intelligence, these chips offer new forms of data processing, facilitating tasks such as pattern recognition and real-time decision making.

In particular, Intel has used Loihi to experiment with autonomous learning systems, such as traffic pattern optimization and advanced robotic control, while IBM has used its TrueNorth neuromorphic chip in applications such as pattern detection in health data and real-time sensor data processing.

Leading technology companies and research centers

Intel, with its Loihi chip, has pioneered the development of dedicated hardware for neuromorphic computing. Loihi simulates the behavior of neurons and synapses, providing a platform for the research and development of artificial intelligence algorithms. IBM is another major player, with its TrueNorth chip, which is known for its energy efficiency and its ability to perform complex data processing operations, making it well suited to applications in artificial intelligence and machine learning. Qualcomm has been exploring neuromorphic computing for more than 10 years, with a focus on systems that integrate deep learning and neuromorphic processing to improve efficiency and processing power.

Among research centers, the California Institute of Technology (Caltech) is where Carver Mead, the pioneer of neuromorphic computing, developed many of his initial ideas, and it remains a leading center for research at the intersection of electronic engineering and neuroscience. MIT has also been a major center for research in artificial intelligence and neuroscience. Its work in neuromorphic computing aims not only to mimic brain processing but also to understand its fundamental principles. The Max Planck Institute for Neurobiology in Germany is known for its work in neuroscience and has been involved in research projects that seek to integrate neurobiological findings into neuromorphic computing. Finally, Stanford University has made significant contributions to the field, especially in the development of brain-inspired algorithms and computational models.

The future of neuromorphic computing

Looking forward, neuromorphic computing is emerging as a key element in the next generation of intelligent technologies. Its development is expected to improve the efficiency and capability of today’s machines, open the door to new forms of human-machine interaction, and even produce systems capable of learning and adapting in a way similar to humans.

Impact on society and industry

The potential impact of neuromorphic computing is immense. In industry, it could lead to greater automation and smarter systems, from manufacturing to services. For example, neuromorphic computing enables robots to process sensory information more efficiently, improving their ability to navigate and interact with complex environments, and it could be used for industrial inspection and for exploration in environments inaccessible to humans. Neuromorphic systems are also improving computer vision, enabling machines to process and understand images and video more efficiently. This has applications in security, where such systems are used for real-time detection and analysis of activity. In society, neuromorphic computing could transform the way we interact with technology, making interfaces more intuitive and personalized.

Conclusion: Towards a future inspired by the human brain

Neuromorphic computing represents an exciting fusion of computer science with knowledge of the human brain. Although it is still in its early stages and faces significant challenges, its potential to transform both technology and our understanding of the brain is immense. With each step forward, we move closer to a future where technology not only mimics, but also learns from nature in an efficient and adaptive manner.