AI-generated summary

This article explores the evolving field of Embodied AI, where artificial intelligence moves beyond cloud-based processing to physical robots capable of learning and adapting through interaction with their environments. At the Future Trends Forum, expert Dario Floreano highlighted how current AI often relies on pre-trained models and scripts, limiting adaptability. Advances in reinforcement learning and large-scale simulators now allow robots to train extensively in virtual environments before operating in the real world, dramatically improving efficiency. Simulators also enable optimization of robot design, potentially leading to novel forms of locomotion inspired by evolutionary processes. Floreano’s work focuses on bio-inspired robots that mimic natural organisms’ movements, such as flexible-wing drones and soft robots, addressing the limitations of mathematical models in capturing complex biological dynamics.

Looking ahead, Floreano envisions robots that evolve autonomously by experimenting with physical configurations, akin to biological evolution, though current simulators must improve to fully replicate natural behaviors. He questions the feasibility of a universal “RobotGPT”—an AI generating autonomous robotic behaviors similar to how ChatGPT produces text—citing challenges due to the complexity of physical interactions. Progress has been made using large language models for control code generation and vision-language-action models for task execution, but these rely on existing data and do not create genuinely new skills. The article concludes that specialized AI systems tailored to different robots may emerge, but fully autonomous skill learning in robots remains an ambitious goal still to be achieved.

Artificial intelligence is no longer confined to the cloud. Dario Floreano explores the future of Embodied AI, bio-inspired robotics, and the key challenges in achieving robotic autonomy

This article has been translated using artificial intelligence

Artificial intelligence is evolving beyond cloud-based data processing. It is now entering the physical world through autonomous robots that learn from and adapt to their environments. This transition was the focus of the latest edition of the Future Trends Forum (FTF) by the Fundación Innovación Bankinter, where international experts examined the challenges and opportunities of Embodied AI. One of the key contributors to this discussion was Dario Floreano, Professor and Director of the Laboratory of Intelligent Systems at EPFL, and a pioneer in the development of bio-inspired robots.


From Simulation to Reality: AI in Physical Bodies

Floreano emphasizes that many current AI systems rely on pre-trained models running in the cloud and operate based on pre-programmed scripts, which limits their ability to adapt. To achieve true robotic intelligence, these systems must learn from their environment through trial and error.

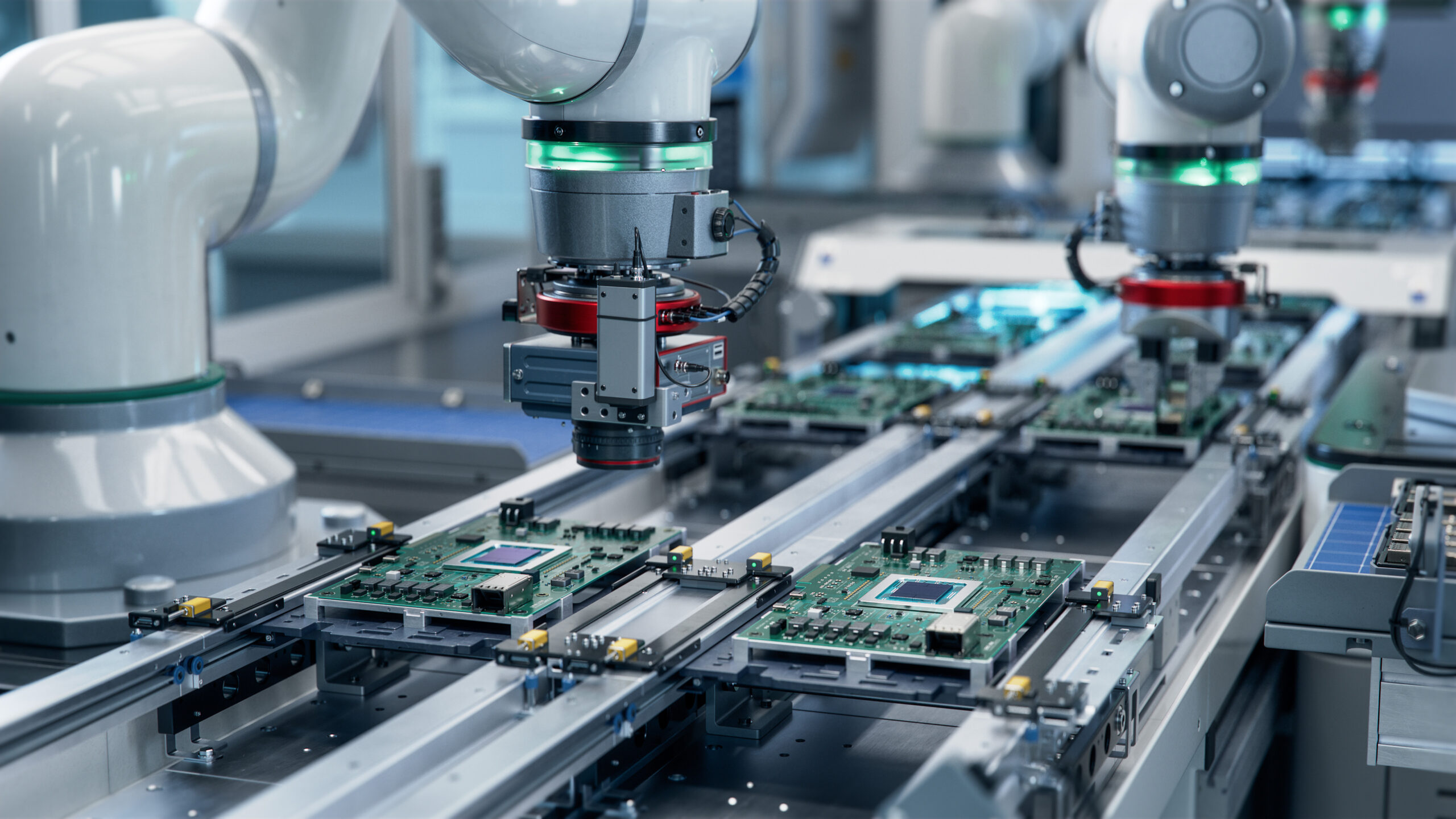

This approach is not new. Reinforcement learning has been used in robotics for over three decades, but a major breakthrough in recent years has been the development of large-scale simulators. Today, it is possible to train robots in highly realistic virtual environments before deploying them in the physical world.
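As a rough illustration of learning by trial and error, the sketch below uses tabular Q-learning. It is one classic reinforcement-learning algorithm, chosen here for simplicity; the talk does not name a specific method. The “robot” is a hypothetical agent on a six-cell track that is rewarded only when it reaches the last cell. The track, the reward, and the hyperparameters are all assumptions made for illustration.

```python
import random

# Hypothetical toy environment: a robot on a 1-D track of N_CELLS cells.
# It starts at cell 0 and is rewarded only when it reaches the goal cell.
N_CELLS = 6
GOAL = N_CELLS - 1
ACTIONS = (-1, +1)  # index 0: step left, index 1: step right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Move one cell (walls clip the motion); reward 1.0 only at the goal."""
    next_state = min(max(state + action, 0), N_CELLS - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Learn action values q[state][action_index] purely by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore: try a random action
            else:
                best = max(q[state])  # exploit, breaking ties at random
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Temporal-difference update toward reward + discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

After training, the preferred action in every cell should be “step right”: the agent found the route from the reward signal alone, not from a pre-programmed script.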

Simulation: The New Lab for Embodied AI

Training AI models in the real world has traditionally been costly and complex. A physical robot must deal with unpredictable terrain, unexpected obstacles, or weather variations—making experimentation slow, expensive, and sometimes unsafe. This is where advanced simulators come into play.

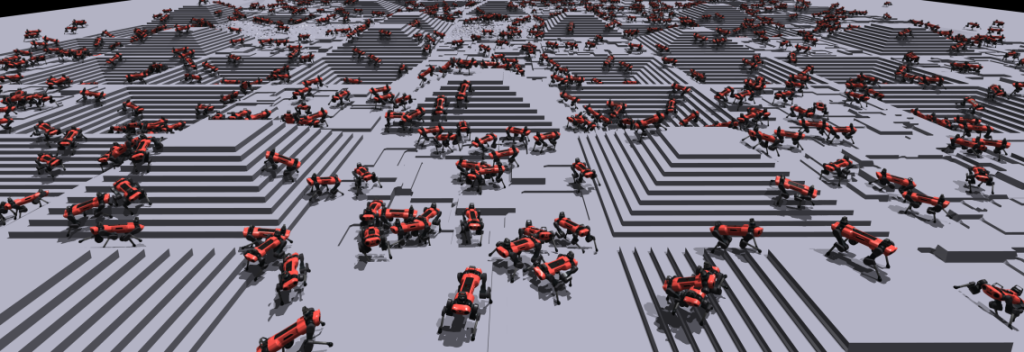

These digital environments allow a robot to practice millions of times under controlled conditions before facing real-world scenarios. Floreano notes that his colleague Marco Hutter at ETH Zurich has developed simulators in which many copies of a robot learn complex tasks in parallel, leading to significant gains in training efficiency and autonomy.
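The batching idea behind parallel simulation can be sketched with NumPy arrays: instead of stepping one robot at a time, the simulator advances thousands of copies in a single vectorized operation. This is a minimal sketch under stated assumptions: the “robot” is a hypothetical 1-D point mass, the controller is a two-gain PD law, and the “learning” is a crude random search over gains, not the deep reinforcement learning used in the work Floreano cites.

```python
import numpy as np

# Hypothetical toy "robot": a 1-D point mass that should move from 0.0 to 1.0.
# Thousands of independent copies are stored as entries of NumPy arrays and
# advanced together, the batching idea behind massively parallel simulators.
NUM_ENVS = 4096
DT = 0.05  # simulation time step (s)


def step(pos: np.ndarray, vel: np.ndarray, force: np.ndarray):
    """Advance every robot by one time step in a single vectorized call."""
    vel = vel + DT * force       # unit mass assumed
    pos = pos + DT * vel
    reward = -np.abs(pos - 1.0)  # reward is higher closer to the target
    return pos, vel, reward


def rollout(gains: np.ndarray, steps: int = 100) -> np.ndarray:
    """Run one PD controller per robot, all in parallel; return total rewards."""
    pos = np.zeros(len(gains))  # every copy starts at rest at position 0
    vel = np.zeros(len(gains))
    total = np.zeros(len(gains))
    for _ in range(steps):
        force = gains[:, 0] * (1.0 - pos) - gains[:, 1] * vel
        pos, vel, reward = step(pos, vel, force)
        total += reward
    return total


# Crude parallel "learning": each copy tries different random gains (kp, kd),
# and the best-scoring pair is kept.
rng = np.random.default_rng(0)
candidates = rng.uniform(0.0, 10.0, size=(NUM_ENVS, 2))
scores = rollout(candidates)
best_gains = candidates[np.argmax(scores)]
print("best (kp, kd):", best_gains, "score:", scores.max())
```

Production simulators apply the same batching principle to full physics models, typically on GPUs, which is what makes millions of practice runs affordable.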

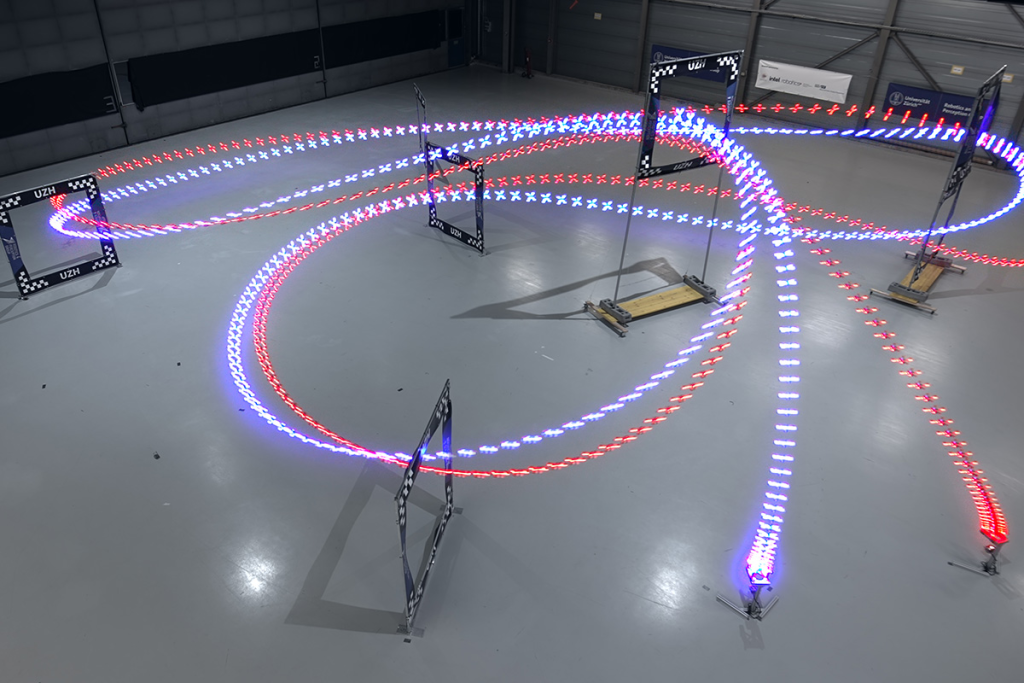

Recent examples show how effective this approach can be. In autonomous drone racing, drones trained in simulators have already surpassed human pilots in competition. These results rely on sim-to-real transfer techniques, which let models trained in virtual environments apply what they have learned in the real world with minimal manual adjustment.
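One widely used way to narrow the gap between simulation and reality is domain randomization: physical parameters are resampled for each simulated run, so the controller cannot overfit a single idealized model. The article does not say which sim-to-real techniques the racing drones relied on, so treat this as a generic, hypothetical sketch; the mass and drag ranges, the PD controller, and the candidate gains are all assumptions.

```python
import numpy as np

# Domain randomization sketch. The mass and drag ranges, the PD controller,
# and the candidate gains are hypothetical values chosen for illustration.
rng = np.random.default_rng(42)
N_SAMPLES = 256
mass = rng.uniform(0.5, 2.0, size=N_SAMPLES)  # kg, one per randomized "reality"
drag = rng.uniform(0.0, 0.5, size=N_SAMPLES)  # linear drag coefficient


def tracking_cost(kp: float, kd: float, mass: np.ndarray, drag: np.ndarray,
                  steps: int = 200, dt: float = 0.02) -> np.ndarray:
    """Simulate a 1-D point mass driven toward 1.0 under each physics sample.

    Returns the time-integrated distance to the target for every sample.
    """
    pos = np.zeros_like(mass)
    vel = np.zeros_like(mass)
    cost = np.zeros_like(mass)
    for _ in range(steps):
        force = kp * (1.0 - pos) - kd * vel
        acc = (force - drag * vel) / mass
        vel = vel + dt * acc
        pos = pos + dt * vel
        cost += np.abs(1.0 - pos) * dt
    return cost


# Pick the gains that perform best on average across all randomized models,
# rather than on a single nominal model.
candidates = [(kp, kd) for kp in (2.0, 5.0, 10.0) for kd in (0.5, 2.0, 5.0)]
avg_cost = [tracking_cost(kp, kd, mass, drag).mean() for kp, kd in candidates]
robust_gains = candidates[int(np.argmin(avg_cost))]
print("robust (kp, kd):", robust_gains)
```

In a real pipeline, the randomized quantities would be simulator parameters such as masses, friction, or sensor noise, and the controller would be a learned policy rather than a small grid of gains.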

Simulators have also accelerated progress in quadruped robotics, enabling robots to walk or run over uneven terrain without human intervention. Companies like Boston Dynamics have refined this method, developing robots like Spot that move smoothly through industrial and rescue environments without relying on rigid programming.

Beyond Training: Simulation for Robotic Design Optimization

Floreano also highlights that simulators are not just for training. They are becoming critical tools for optimizing a robot’s physical design. Instead of building costly prototypes, researchers can test various configurations in virtual environments, adjusting the design and evaluating factors such as stability, energy consumption, or responsiveness to unexpected conditions.

This paves the way for a future where robots are not manually designed, but instead evolve through simulation cycles, discovering the most efficient structures for each task. Could an algorithm develop new forms of locomotion that humans haven’t imagined yet? Floreano believes we are getting closer to that possibility.
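Designs that “evolve through simulation cycles” can be sketched as a simple evolutionary algorithm: keep the best designs, create mutated copies of them, and repeat. In this sketch the design variables (leg length and stiffness) and the fitness function are hypothetical stand-ins; in practice, a physics simulator would score each candidate body.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    leg_length: float  # metres (hypothetical design variable)
    stiffness: float   # arbitrary units (hypothetical design variable)


def simulated_fitness(d: Design) -> float:
    """Stand-in for a physics simulation (made up for illustration).

    Higher is better; this toy function peaks at leg_length=0.4, stiffness=3.0.
    """
    return -10.0 * (d.leg_length - 0.4) ** 2 - 0.1 * (d.stiffness - 3.0) ** 2


def mutate(d: Design, rng: random.Random) -> Design:
    """Perturb each design variable with Gaussian noise, clipped to valid ranges."""
    return Design(
        leg_length=min(max(d.leg_length + rng.gauss(0.0, 0.05), 0.05), 1.0),
        stiffness=min(max(d.stiffness + rng.gauss(0.0, 0.5), 0.1), 10.0),
    )


def evolve(generations: int = 60, pop_size: int = 20, n_elite: int = 5,
           seed: int = 1) -> Design:
    rng = random.Random(seed)
    population = [Design(rng.uniform(0.05, 1.0), rng.uniform(0.1, 10.0))
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the best-scoring designs (elitism).
        population.sort(key=simulated_fitness, reverse=True)
        elite = population[:n_elite]
        # Variation: refill the population with mutated copies of elite designs.
        children = [mutate(rng.choice(elite), rng) for _ in range(pop_size - n_elite)]
        population = elite + children
    return max(population, key=simulated_fitness)


best = evolve()
print(best, simulated_fitness(best))
```

Because the elite designs are always carried over, the best fitness never decreases from one generation to the next; the open question Floreano raises is whether the fitness a simulator reports matches what the real body would achieve.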

Bio-Inspired Robots: Nature as a Model

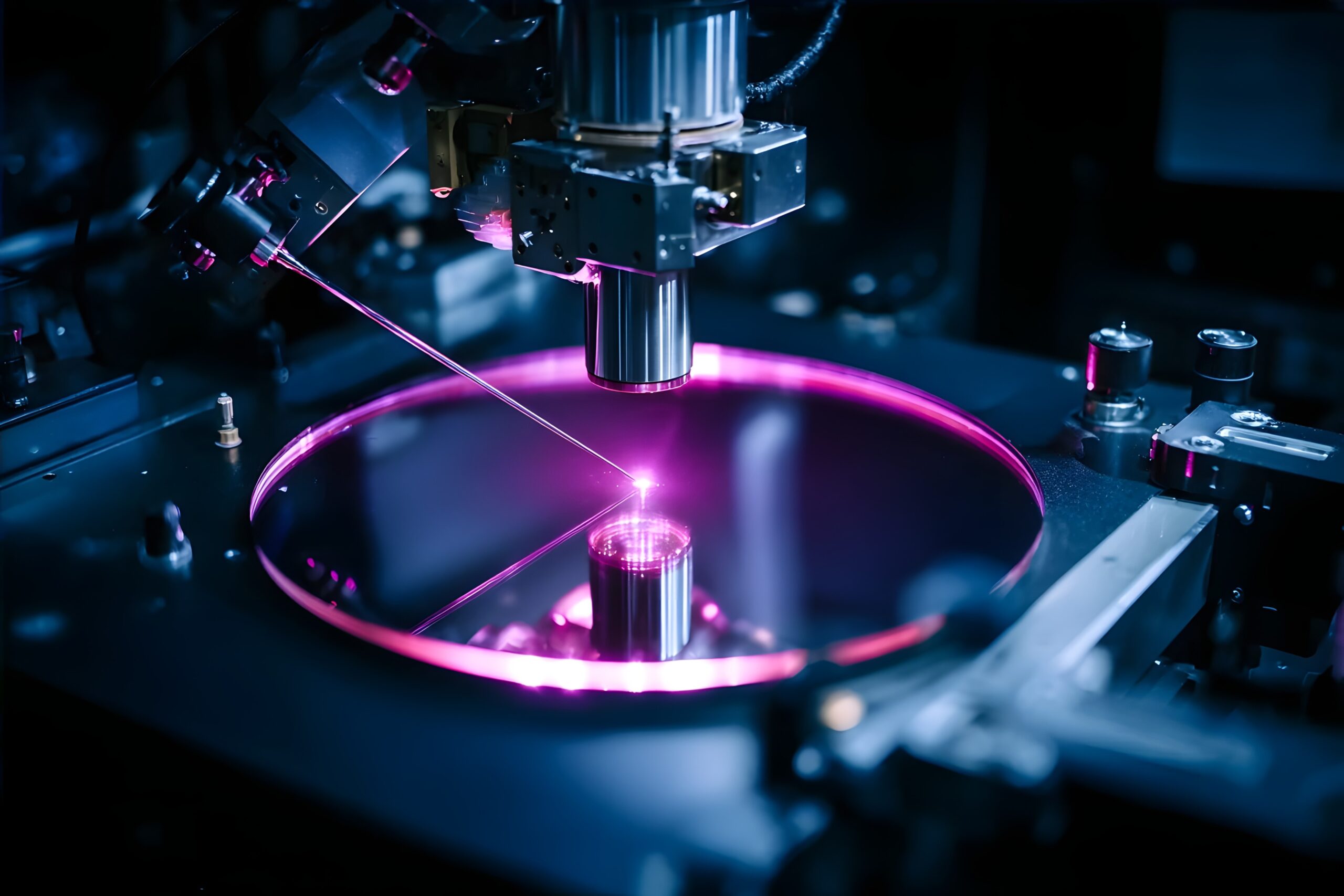

One area in which Dario Floreano is internationally recognized is the development of bio-inspired robots, systems that replicate the behavior and structure of living organisms to operate more effectively in the physical world. His approach stems from a key observation: current mathematical models still can’t fully predict certain natural movements, such as bird flight or insect locomotion. The solution, he argues, is to build better robotic bodies that mimic nature.

Beyond Mathematical Models: Learning from Biology

Although biomechanics and aerodynamics have advanced greatly, we still don’t fully understand how some organisms interact with their environments. For example, conventional drones rely on fixed wings or rotors, whereas birds and bats use a combination of flexible wings and coordinated motion to maneuver with high precision in tight spaces.

To better understand these dynamics, researchers in Floreano’s lab have taken an innovative approach: building robots that replicate biological structures and testing them under real-world conditions. Rather than relying solely on simulations, they use wind tunnels or natural environments to observe how these prototypes interact with airflows and optimize their design accordingly.

Examples of Bio-Inspired Robotics in Action

Floreano’s lab has developed several robotic systems based on these principles. Examples include:

- Flexible-wing drones: Unlike traditional quadcopters, these drones adjust wing shape and position in real time, improving stability in strong winds.

- Soft robots: Inspired by octopuses and worms, these robots have flexible bodies that adapt to irregular terrain and move without rigid structures.

- Hybrid systems: Combining dynamic materials with advanced sensors, these robots can change shape depending on their surroundings.

These innovations enable greater energy efficiency, better adaptability to complex environments, and more precise execution of tasks compared to traditional rigid robots.

What’s Next? Evolutionary Optimization in Robotics

Floreano believes the next step in bio-inspired robotics will be to develop robots that evolve and optimize themselves. Instead of relying on predefined models, future robots could experiment with different physical configurations and learn which are most effective. This approach—akin to biological evolution—could give rise to new forms of movement and manipulation that we have yet to conceive.

But this path is not without challenges. Current simulators are still not advanced enough to replicate the complex behaviors of biological systems with high accuracy. According to Floreano, the key lies in combining real-world experiments with AI models capable of interpreting physical feedback and generating new solutions.

Toward a “RobotGPT”? The Future of Embodied AI

Dario Floreano poses a key question: “Can we build a RobotGPT, an AI capable of generating autonomous behaviors in robots the same way ChatGPT generates text?” In theory, robotic behavior can be understood as a sequence of sensory inputs and motor commands, much as words form sentences with a logical structure. However, generating autonomous behavior in physical robots remains a far more complex challenge than generating text.
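To see how robotic behavior can be framed like text, continuous motor commands can be discretized into a finite vocabulary of tokens, which a sequence model could then learn to predict one token at a time. The sketch below assumes commands normalized to [-1, 1] and 256 bins per joint; both are illustrative assumptions, not a description of any specific model.

```python
import numpy as np

NUM_BINS = 256          # assumed vocabulary size per joint
LOW, HIGH = -1.0, 1.0   # assumed normalized range of motor commands


def tokenize(actions: np.ndarray) -> np.ndarray:
    """Map continuous commands in [LOW, HIGH] to integer tokens 0..NUM_BINS-1."""
    scaled = (np.clip(actions, LOW, HIGH) - LOW) / (HIGH - LOW)  # now in [0, 1]
    return np.minimum((scaled * NUM_BINS).astype(int), NUM_BINS - 1)


def detokenize(tokens: np.ndarray) -> np.ndarray:
    """Map each token back to the centre of its bin."""
    return LOW + (tokens + 0.5) * (HIGH - LOW) / NUM_BINS


# A short trajectory: 3 time steps x 2 joints (hypothetical values).
trajectory = np.array([[0.0, 0.5], [0.1, 0.4], [-0.2, 1.0]])
tokens = tokenize(trajectory).flatten()  # one "sentence" of 6 action tokens
print(tokens.tolist())
recovered = detokenize(tokens).reshape(trajectory.shape)
```

A GPT-style model trained on such token streams would output bin indices, which `detokenize` maps back to motor commands with an error of at most half a bin width. The harder part, as Floreano points out, is producing behaviors that work under real physics.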

AI Models in Robotics: Progress and Current Limitations

In recent years, two important advances have shaped this field:

- Using Large Language Models (LLMs) to generate robot control programs: Today, LLMs can draw on existing code fragments, learned from their training data or found online, and combine them to create control commands for drones or robotic arms. For example, if a user prompts “fly north,” the model can locate and adapt relevant code. However, this approach still depends on pre-existing code and does not generate genuinely new behaviors.

- Vision-Language-Action (VLA) models, a step forward with limitations: These models combine image processing, natural language understanding, and control commands. For example, if the system sees a bowl of fruit and is instructed to “place the strawberry in the cup,” the robot can translate that instruction into a sequence of actions. Yet, as Floreano notes, these models do not create new skills; they generalize from a database of existing behaviors.

The Real Challenge: Creating New Robotic Skills

The core problem is that physical robots cannot rely solely on pre-existing data or simulations. Floreano explains that, unlike language—which is relatively static and predictable—the physical world involves dynamic forces like gravity, friction, and air resistance. For instance, a quadruped robot cannot be programmed with fixed equations for all possible situations; it must learn through trial and error in real environments.

This is where reinforcement learning and advanced simulators come into play. Until recently, robotics faced a major bottleneck because each learning cycle required real-world experimentation. But with modern simulators—such as those developed by Marco Hutter at ETH Zurich—robots can now train virtually and transfer that knowledge to the real world. One example is the first autonomous drone to defeat human pilots in racing competitions, a milestone made possible through simulation-based training.

Source: Challenge Accepted: High-speed AI Drone Overtakes World-Champion Drone Racers

Floreano also showcases the use of massively parallel deep reinforcement learning to teach robots to walk in minutes—a method that dramatically accelerates robotic learning.

Source: Learning to Walk in Minutes Using Massively Parallel Deep Reinforcement Learning

Will There Be a Universal RobotGPT?

Floreano is skeptical about the emergence of a single, universal AI model for robots. Instead, he envisions a family of specialized AIs, each tailored to different types of robots:

- VLA models for robotic arms, optimized for object manipulation

- Biomechanics-based models for quadrupeds, focused on locomotion

- AIs for drones, combining aerodynamics with advanced sensing systems

The ultimate challenge remains: developing AI that can truly learn new skills without human intervention—a milestone that still lies ahead.

